For years, most shadows in video games were pre-calculated and static. Nowadays, as environments become increasingly interactive and animated, the number of dynamic objects has greatly increased. In addition, static shadows require a lot of memory, making them unsuitable for the huge levels of modern open worlds. For these reasons, many recent games (Battlefield 1, The Legend of Zelda: Tears of the Kingdom, Horizon Zero Dawn, …) rely almost exclusively on dynamic shadows.

Each game handles shadows differently, depending on the power of the platform and the level of realism it aims for. As a result, there are many ways to render shadows in real time. In this article, we take a look at the most widely used and most promising methods.

Light types

In game engines, there are four main types of light.

- Directional light: Uniformly illuminates the entire level from a single direction, often used to simulate sunlight.

- Spotlight: Illuminates a conical area.

- Point light: Illuminates in all directions, like a light bulb.

- Area light: Emits light from a surface, like a screen or a neon tube.

It’s possible to generate dynamic shadows with all types of light, but some are more complex than others. Many games limit themselves to the least expensive ones: directional lights and spotlights. The methods covered here work for these two types of light; they sometimes require modifications to work with area and point lights.

I – Hard Shadows

The first step in shadow management is to determine whether a pixel rendered on screen is lit or not.

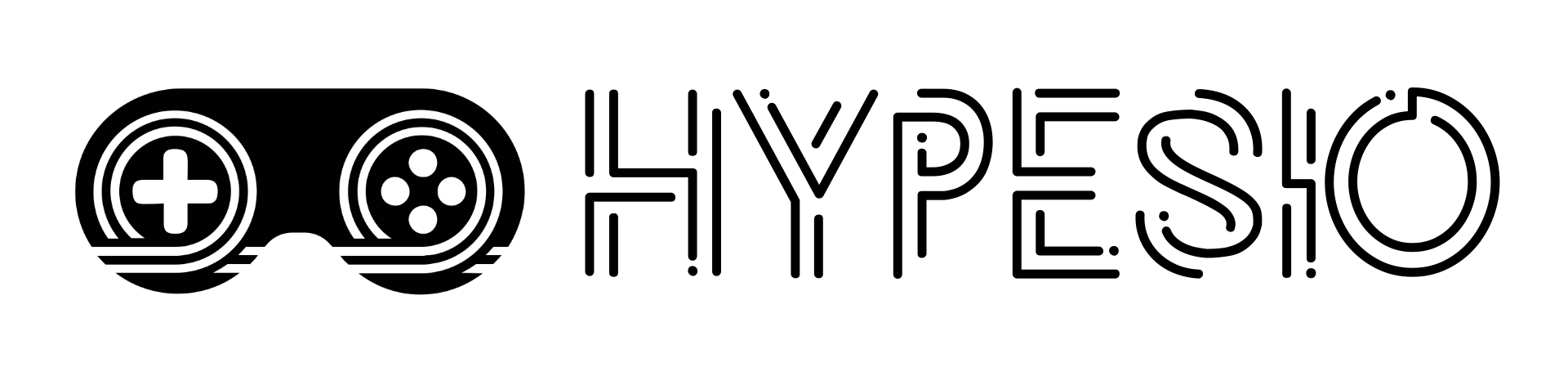

Shadow Map

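One of the oldest methods is the shadow map. The idea is to render the scene from the point of view of the light. This render stores, in each texel (the texture equivalent of a pixel), the distance between the light and the nearest object. The result is called a depth map. From this simple texture, it’s possible to tell whether what the main camera sees is in shadow. For each visible point, we compare its distance to the light with the depth stored in the corresponding texel. If the stored depth is smaller, another object lies between the point and the light, so the point is in shadow.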

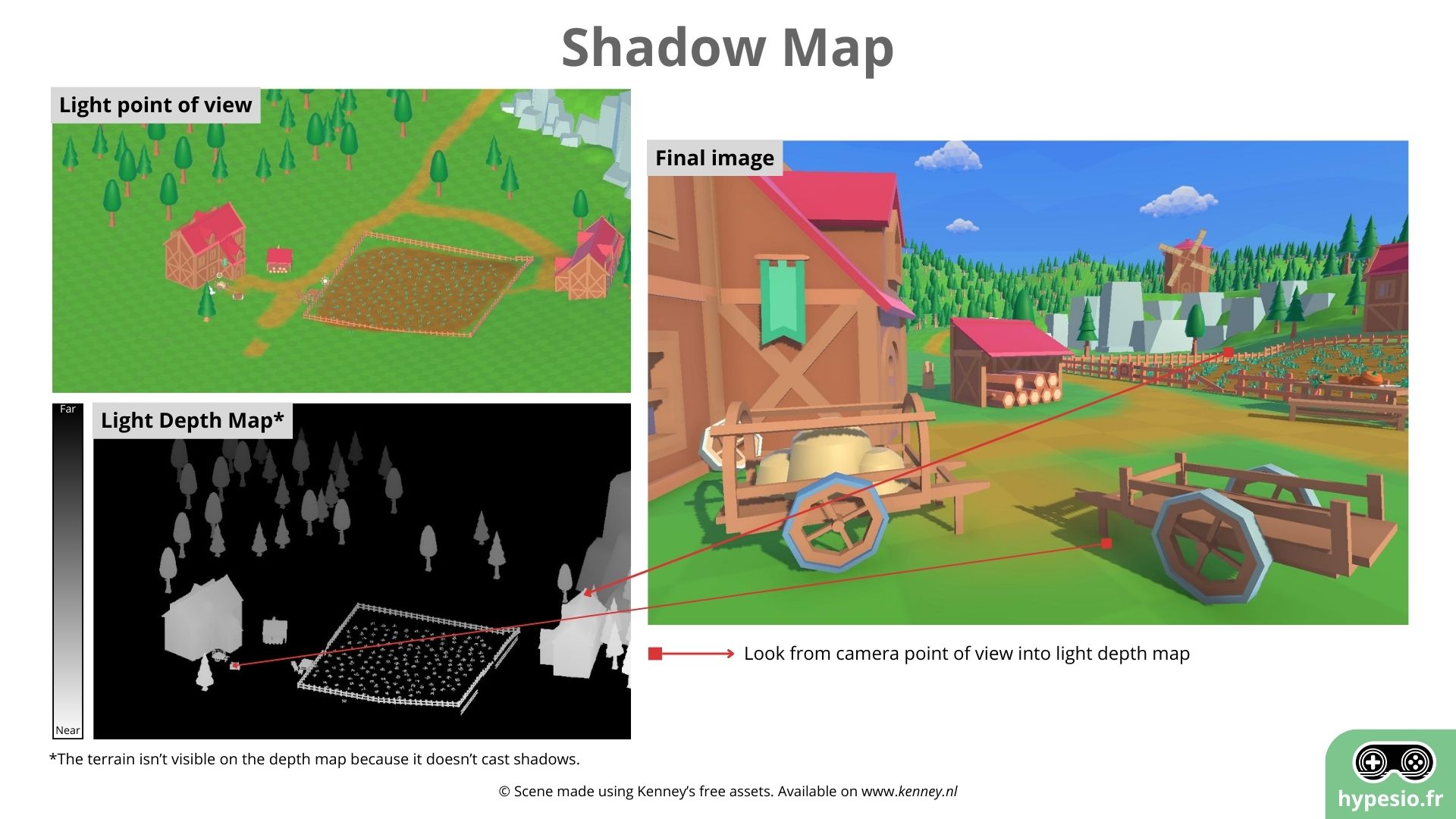
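Below is a minimal Python sketch of the shadow map test. It assumes a directional light pointing straight down, so light space is simply the x/z plane. The scene is a hypothetical list of surface points rather than rasterized triangles. The small `bias` is a common addition not mentioned above: it stops a surface from shadowing itself ("shadow acne").

```python
import math

def build_shadow_map(points, size, texel_size, light_height):
    """'Render' scene points from a directional light pointing straight down.

    Each texel keeps the smallest light-to-surface distance: the depth map."""
    depth_map = [[math.inf] * size for _ in range(size)]
    for x, y, z in points:
        u, v = int(x // texel_size), int(z // texel_size)
        if 0 <= u < size and 0 <= v < size:
            depth = light_height - y  # distance along the light direction
            depth_map[v][u] = min(depth_map[v][u], depth)
    return depth_map

def in_shadow(point, depth_map, texel_size, light_height, bias=0.01):
    """Compare the point's own depth with the depth stored in its texel."""
    x, y, z = point
    u, v = int(x // texel_size), int(z // texel_size)
    depth = light_height - y
    return depth - bias > depth_map[v][u]

# Usage: a 4 x 4 m floor (y = 0) with a 1 x 1 m box top at y = 1.
floor = [(i + 0.5, 0.0, j + 0.5) for i in range(4) for j in range(4)]
box_top = [(1.5, 1.0, 1.5)]
shadow_map = build_shadow_map(floor + box_top, size=4, texel_size=1.0, light_height=10.0)
print(in_shadow((1.5, 0.0, 1.5), shadow_map, 1.0, 10.0))  # True: under the box
print(in_shadow((3.5, 0.0, 3.5), shadow_map, 1.0, 10.0))  # False: open floor
print(in_shadow((1.5, 1.0, 1.5), shadow_map, 1.0, 10.0))  # False: the box top itself
```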

Shadow Cascades

The technique most commonly used today for real-time shadow rendering is an evolution of the shadow map: shadow cascades, also known as cascaded shadow maps. It is the default method in Unity URP & HDRP, Godot Engine and Unreal Engine 4. The idea is to render several shadow maps, each covering a larger area the further it is from the camera. Each cascade typically uses the same resolution, so the nearest one gets the most texels per meter. This allows for detailed close-up shadows with limited aliasing (pixelation), and lower-quality shadows in the distance.
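The text above does not say how cascade boundaries are chosen. One common approach, used here as an assumption, is the "practical split scheme", which blends logarithmic and uniform split distances. The Python sketch below computes these splits, then has each pixel use the first cascade whose far distance contains it. The function names and the `blend` parameter are illustrative.

```python
def cascade_splits(near, far, count, blend=0.5):
    """Far distance of each cascade between the camera's near and far planes.

    blend = 1.0 gives purely logarithmic splits (cascades grow geometrically),
    blend = 0.0 gives uniform splits."""
    splits = []
    for i in range(1, count + 1):
        t = i / count
        log_split = near * (far / near) ** t
        uniform_split = near + (far - near) * t
        splits.append(blend * log_split + (1.0 - blend) * uniform_split)
    return splits

def select_cascade(view_depth, splits):
    """Index of the cascade covering a pixel at this distance from the camera."""
    for index, split_far in enumerate(splits):
        if view_depth <= split_far:
            return index
    return None  # beyond the last cascade: no shadow map covers this pixel

splits = cascade_splits(near=1.0, far=1000.0, count=3, blend=1.0)
print([round(s, 3) for s in splits])  # [10.0, 100.0, 1000.0]
print(select_cascade(5.0, splits), select_cascade(50.0, splits))  # 0 1
```

With purely logarithmic splits, each cascade here covers ten times the depth range of the previous one. This roughly matches how perspective makes distant texels cover more of the screen.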

Unreal Engine 5 features virtual shadow mapping, a mix between virtual texturing and shadow cascades. Virtual texturing consists of using very large textures (larger than 8K) and sending only the visible parts of the texture to the GPU, to limit their memory impact. With virtual shadow mapping, the world is divided into zones, each with its own shadow map. Each zone's map is larger or smaller depending on the zone's distance from the player. These shadow maps are stored in one large shared texture. To limit rendering costs, a zone's shadow is updated only if an object moves inside it or if the light moves. This technique results in highly detailed shadows with good performance.
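To make the update rule concrete, here is a hypothetical Python sketch of the caching idea. It is not Unreal Engine's actual implementation. Each zone's shadow page is kept from frame to frame:

- an object moving in a zone discards only that zone's page;
- the light moving discards all pages;
- only visible zones without a valid page are rendered.

The varying page resolution with distance is left out.

```python
class ShadowPageCache:
    """Hypothetical cache of per-zone shadow-map pages (zone -> frame rendered)."""

    def __init__(self):
        self.pages = {}
        self.pages_rendered = 0

    def on_object_moved(self, zone):
        # Only the page of the zone containing the moving object is stale.
        self.pages.pop(zone, None)

    def on_light_moved(self):
        # Every page was rendered from the old light position.
        self.pages.clear()

    def update(self, visible_zones, frame):
        """Render the visible zones whose page is missing; reuse the others."""
        for zone in visible_zones:
            if zone not in self.pages:
                self.pages[zone] = frame  # stand-in for rendering the page
                self.pages_rendered += 1

cache = ShadowPageCache()
visible = [(0, 0), (0, 1), (1, 0)]
cache.update(visible, frame=0)   # first frame: 3 pages rendered
cache.update(visible, frame=1)   # nothing changed: 0 pages rendered
cache.on_object_moved((0, 1))
cache.update(visible, frame=2)   # only zone (0, 1) is re-rendered
print(cache.pages_rendered)      # 4
```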

Shadow Volumes – Doom 3

Some methods are less widespread but can be useful in specific cases. This is true of the shadow volumes method, notably used in Doom 3. The idea here is to extrude the mesh triangles away from the light, building a volume that encloses the shadowed space. If a pixel lies inside this volume, between the extruded triangles, then it is in shadow.

To detect shaded pixels, we do a render pass dedicated to the extruded triangles. In the simplest variant (depth pass), only the faces located in front of the visible surface, those that pass the depth test, are counted. Each time a front face is rendered, we add +1, and each time a back face is rendered, we add -1. During the main render, for each pixel, we just need to look at the value stored by the previous pass to know whether it is in shadow. If the value is non-zero, the pixel is in shadow; otherwise it is lit. These values can be stored in the stencil buffer. This is the case in Doom 3, for example, where the method is sometimes referred to as stencil shadowing.
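The counting rule can be checked on a single pixel with a small Python sketch. It assumes the simplest, depth-pass variant, where only faces in front of the visible surface are counted. `volume_faces` is a hypothetical list of the extruded faces covering the pixel, each given as its depth and whether it faces the camera. Doom 3 is usually associated with the depth-fail variant ("Carmack's reverse"). That variant counts the faces behind the surface instead and stays correct when the camera is inside a volume.

```python
def stencil_value(volume_faces, surface_depth):
    """Depth-pass stencil count for one pixel.

    volume_faces: (depth, faces_camera) for each extruded face covering the
    pixel. Only faces in front of the visible surface pass the depth test."""
    stencil = 0
    for depth, faces_camera in volume_faces:
        if depth < surface_depth:
            stencil += 1 if faces_camera else -1
    return stencil

def is_in_shadow(volume_faces, surface_depth):
    return stencil_value(volume_faces, surface_depth) != 0

# One shadow volume crossed by the pixel's view ray: enters at 2, exits at 5.
faces = [(2.0, True), (5.0, False)]
print(is_in_shadow(faces, 3.0))  # True: the surface is inside the volume
print(is_in_shadow(faces, 7.0))  # False: the ray enters and leaves (+1 - 1)
print(is_in_shadow(faces, 1.0))  # False: the surface is in front of the volume
```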

This method generates shadows of perfect quality, with no aliasing and a fixed memory footprint that depends only on the screen resolution. The problem is the extrusion of the triangles, which creates significant GPU overhead in complex scenes. In addition, the technique is limited to hard shadows and is complex to adapt to soft shadows. If you’re interested in the subject, this article in GPU Gems explains the algorithm, its advantages and its disadvantages in detail.

II – Soft Shadows

With shadow maps, we get hard shadows with no penumbra. Games that don’t aim for realism can sometimes get by with this, but others need a way to soften their shadows. In the real world, a shadow is more or less diffuse depending on the distance between the object casting it and the surface receiving it. The base of a tree casts a sharp shadow, while its branches and leaves create a more diffuse one. This effect not only adds realism but also helps the player judge distances.

Fixed penumbra

The simplest way to soften the edges of a shadow is Percentage-Closer Filtering (PCF). For each pixel rendered on screen, the idea is to look not only at the corresponding texel in the shadow map, but also at the texels surrounding it. The function describing the area covered and the weight given to each texel is called a kernel or filter. Each of these texels is compared with the pixel’s distance to the light. The kernel then averages the results of these comparisons, not the depths themselves, to deduce the shadow intensity.

If the pixel lies near the edge of an object in the depth map, only some of the comparisons report a blocker, so the shadow is only partial. The result is a shadow with soft edges. The size of the penumbra depends on the kernel size. The drawback of this technique is that all objects get shadows with identical edges, whatever their distance from the light source. But it has the advantage of a fixed computation cost. This simple technique is sufficient for many games.
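Below is a minimal Python sketch of PCF with the simplest kernel: a square of equally weighted texels. `depth_map` is a hypothetical square 2D list of light-space depths, and `bias` guards against self-shadowing. Note that the function averages pass/fail comparisons, never the stored depths.

```python
def pcf(depth_map, u, v, receiver_depth, radius=1, bias=0.01):
    """Fraction of the (2*radius+1)^2 texels around (u, v) that occlude the
    receiver: 0.0 = fully lit, 1.0 = fully in shadow."""
    size = len(depth_map)
    occluded = 0
    count = 0
    for dv in range(-radius, radius + 1):
        for du in range(-radius, radius + 1):
            x = min(max(u + du, 0), size - 1)  # clamp lookups to the map
            y = min(max(v + dv, 0), size - 1)
            if receiver_depth - bias > depth_map[y][x]:
                occluded += 1
            count += 1
    return occluded / count

# A 4 x 4 depth map: columns 0-1 are covered by a blocker at depth 5,
# columns 2-3 only see the ground at depth 10.
depth_map = [[5.0, 5.0, 10.0, 10.0] for _ in range(4)]
print(pcf(depth_map, 0, 1, receiver_depth=10.0))  # 1.0: deep inside the shadow
print(pcf(depth_map, 2, 1, receiver_depth=10.0))  # 0.333...: on the edge
print(pcf(depth_map, 3, 1, receiver_depth=10.0))  # 0.0: fully lit
```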

If we naively take every surrounding texel in a square, the resulting shadows tend to show blocky artifacts and remain partially aliased. With this approach, a very soft shadow requires covering a large area, and therefore a lot of memory accesses. To improve the results, various kernel shapes can be used to select the surrounding texels in the depth map. Among the most commonly used are the Poisson disk and the Vogel disk. Disk methods are designed to select texels uniformly within a circle around a point. With few samples, they can cover a large area of the shadow map and achieve very good soft shadows. Adding a random rotation to these patterns makes them non-repeating, which limits visual artifacts.
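For illustration, here is a Python sketch of the Vogel disk. Sample i sits at radius sqrt((i + 0.5) / n) and at an angle of i times the golden angle. This spreads a handful of samples evenly over the disk. The `rotation` parameter is where a per-pixel random angle would go. Scaling the offsets by a filter radius in texels gives the PCF sample positions.

```python
import math

GOLDEN_ANGLE = math.pi * (3.0 - math.sqrt(5.0))  # about 2.39996 rad

def vogel_disk(sample_count, rotation=0.0):
    """Offsets spread evenly over the unit disk, rotated by `rotation` radians."""
    samples = []
    for i in range(sample_count):
        radius = math.sqrt((i + 0.5) / sample_count)  # sqrt: uniform area density
        angle = i * GOLDEN_ANGLE + rotation
        samples.append((radius * math.cos(angle), radius * math.sin(angle)))
    return samples

# 16 PCF taps within 3 texels of the shaded texel, with a per-pixel rotation
# (here a fixed stand-in value instead of noise).
taps = [(3.0 * dx, 3.0 * dy) for dx, dy in vogel_disk(16, rotation=1.2)]
```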

Variable penumbra

Percentage-closer filtering has many variants. One of the most widely used is Percentage-Closer Soft Shadows (PCSS). This method takes into account the distance between the object casting the shadow (the blocker) and the surface receiving it (the receiver). The further the blocker is from the receiver, the larger the kernel needs to be, so that it covers a larger area of the shadow map.
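PCSS estimates the penumbra width with the relationship given in the Percentage-Closer Soft Shadows paper listed in the sources. It comes from similar triangles between the light, the blocker and the receiver. Here $d$ denotes a distance from the light and $w_{\text{light}}$ the size of the light:

$$
w_{\text{penumbra}} = \frac{\left(d_{\text{receiver}} - d_{\text{blocker}}\right) \cdot w_{\text{light}}}{d_{\text{blocker}}}
$$

For example, take a light 1 unit wide, a blocker 2 units from the light and a receiver 6 units away. The penumbra is then (6 - 2) × 1 / 2 = 2 units wide. If the receiver touches the blocker, it shrinks to zero.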

To find the blockers affecting the pixel to be rendered, we first search the shadow map around it, using the same kinds of kernels as described above. Every texel closer to the light than the receiver counts as a blocker. We then average the depths of these blockers to deduce the size of the kernel to use. With a naive kernel, a blocker very far from the receiver can require a very large number of texels, which increases the computation time.

To avoid this, it’s common to use kernels like the Poisson disk or the Vogel disk and to scale the disk radius according to the receiver/blocker distance. This covers a large area with a fixed number of texture accesses. The result is theoretically less precise, but performance stays stable.
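Putting the three steps together, here is a Python sketch of PCSS under simplifying assumptions:

- `depth_map` is a hypothetical square 2D list of light-space depths;
- `light_size` is expressed directly in shadow-map texels;
- the blocker search and the filtering use the same fixed-size Vogel disk, only with different radii.

```python
import math

GOLDEN_ANGLE = math.pi * (3.0 - math.sqrt(5.0))

def vogel_offset(i, count, rotation):
    """i-th point of a Vogel disk of `count` points on the unit disk."""
    radius = math.sqrt((i + 0.5) / count)
    angle = i * GOLDEN_ANGLE + rotation
    return radius * math.cos(angle), radius * math.sin(angle)

def fetch(depth_map, x, y):
    """Nearest-texel lookup, clamped to the edges of a square map."""
    size = len(depth_map)
    u = min(max(int(round(x)), 0), size - 1)
    v = min(max(int(round(y)), 0), size - 1)
    return depth_map[v][u]

def pcss(depth_map, u, v, receiver_depth, light_size,
         search_radius=4.0, samples=16, rotation=0.0, bias=0.01):
    """Shadow intensity in [0, 1] with a penumbra that widens with distance."""
    # 1. Blocker search: average depth of the texels closer to the light.
    blocker_sum, blocker_count = 0.0, 0
    for i in range(samples):
        dx, dy = vogel_offset(i, samples, rotation)
        depth = fetch(depth_map, u + dx * search_radius, v + dy * search_radius)
        if depth < receiver_depth - bias:
            blocker_sum += depth
            blocker_count += 1
    if blocker_count == 0:
        return 0.0  # nothing between the receiver and the light: fully lit
    blocker_depth = blocker_sum / blocker_count

    # 2. Penumbra width from similar triangles (in texels here).
    penumbra = (receiver_depth - blocker_depth) * light_size / blocker_depth

    # 3. PCF: same number of taps, disk radius scaled by the penumbra.
    occluded = 0
    for i in range(samples):
        dx, dy = vogel_offset(i, samples, rotation)
        depth = fetch(depth_map, u + dx * penumbra, v + dy * penumbra)
        if receiver_depth - bias > depth:
            occluded += 1
    return occluded / samples

# 16 x 16 map: a blocker at depth 5 covers columns 0-7, the ground is at 10.
depth_map = [[5.0] * 8 + [10.0] * 8 for _ in range(16)]
print(pcss(depth_map, 12, 8, receiver_depth=10.0, light_size=4.0))  # 0.0
print(pcss(depth_map, 2, 8, receiver_depth=10.0, light_size=4.0))   # 1.0
print(pcss(depth_map, 8, 8, receiver_depth=10.0, light_size=4.0))   # partial shadow
```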

Analytical Soft Shadows – The Last of Us

With very diffuse lighting, where shadows are not pronounced, the methods described above do not give good results. The solution is to approximate objects with simple analytical shapes such as spheres or capsules. This allows complex calculations to be performed at a reasonable cost. Unlike with a shadow map, the shadow is computed from the point of view of the main camera, for each pixel on screen. The basic idea is to cast a cone from each pixel toward the light. The larger the part of the cone blocked by one of the analytical shapes, the stronger the shadow. This approach is ideal for indoor lighting, but its cost is high, and its use is usually limited to a few characters.

This method is used in The Last of Us and is available in Unreal Engine as capsule shadows.
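To illustrate the cone test, here is a hypothetical Python sketch for a single sphere. It is not Naughty Dog's implementation. It relies on a small-angle approximation: the light cone and the sphere's apparent size are treated as flat discs, and the function measures their overlap. `light_dir` must be a unit vector pointing toward the light. `cone_half_angle` stands in for the angular size of the light. Several occluders can be combined by multiplying their visibilities (1 - occlusion).

```python
import math

def safe_acos(x):
    return math.acos(max(-1.0, min(1.0, x)))  # guard against rounding

def disc_overlap(r1, r2, d):
    """Area shared by two discs of radii r1, r2 whose centres are d apart."""
    if d >= r1 + r2:
        return 0.0
    if d <= abs(r1 - r2):
        return math.pi * min(r1, r2) ** 2  # one disc inside the other
    a1 = safe_acos((d * d + r1 * r1 - r2 * r2) / (2.0 * d * r1))
    a2 = safe_acos((d * d + r2 * r2 - r1 * r1) / (2.0 * d * r2))
    k = (-d + r1 + r2) * (d + r1 - r2) * (d - r1 + r2) * (d + r1 + r2)
    return r1 * r1 * a1 + r2 * r2 * a2 - 0.5 * math.sqrt(max(k, 0.0))

def sphere_occlusion(point, light_dir, cone_half_angle, center, radius):
    """Fraction of the cone from `point` toward the light hidden by a sphere."""
    to_center = [c - p for c, p in zip(center, point)]
    dist = math.sqrt(sum(x * x for x in to_center))
    if dist <= radius:
        return 1.0  # the point is inside the occluder
    sphere_angle = math.asin(radius / dist)  # apparent angular radius
    cos_sep = sum(a * b for a, b in zip(to_center, light_dir)) / dist
    separation = safe_acos(cos_sep)  # angle between cone axis and sphere
    covered = disc_overlap(cone_half_angle, sphere_angle, separation)
    return covered / (math.pi * cone_half_angle ** 2)

up = (0.0, 1.0, 0.0)  # unit vector toward the light
print(sphere_occlusion((0, 0, 0), up, 0.1, (0, 5, 0), 1.0))    # 1.0: sphere covers the cone
print(sphere_occlusion((0, 0, 0), up, 0.1, (10, 0, 0), 1.0))   # 0.0: sphere off to the side
print(sphere_occlusion((0, 0, 0), up, 0.1, (0.5, 5, 0), 0.5))  # partial shadow
```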

III – Improving Detail: More Advanced Methods

The previous methods are all based on the shadow map principle and do not, or only partially, correct its flaws. Other, more advanced methods can overcome these problems, but at a cost or with other limitations.

Screen Space Shadows (Contact Shadows)

The flaws of shadow maps include limited precision and peter-panning, where a shadow looks detached from the object casting it because of the depth bias. The Screen Space Shadows method addresses both of these problems. The idea here is to deduce the shadow of each pixel from what is visible on screen. From each pixel, a short ray is marched toward the light; if it passes behind a surface stored in the depth buffer, the pixel is considered shadowed. To do this, we need a texture containing the normals visible on screen and a depth texture. Both are available with deferred rendering, where color, normals and depth are first rendered into separate textures so that lighting and effects are then calculated only on visible pixels.
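Below is a simplified Python sketch of the screen-space ray march. A smaller depth means closer to the camera. The sketch assumes the caller has already projected the light direction into a per-step screen offset (`step_x`, `step_y`) and a per-step change in view depth (`step_depth`). All names are illustrative, and so is the `thickness` heuristic, which ignores surfaces far in front of the ray.

```python
def screen_space_shadow(depth_buffer, x, y, step_x, step_y, step_depth,
                        steps=16, thickness=0.5, bias=0.01):
    """March from pixel (x, y) toward the light through the depth buffer.

    Returns True if an on-screen surface blocks the ray."""
    height, width = len(depth_buffer), len(depth_buffer[0])
    ray_depth = depth_buffer[y][x]  # start on the shaded surface
    px, py = float(x), float(y)
    for _ in range(steps):
        px, py = px + step_x, py + step_y
        ray_depth += step_depth
        ix, iy = int(round(px)), int(round(py))
        if not (0 <= ix < width and 0 <= iy < height):
            return False  # the ray left the screen: no information, assume lit
        scene_depth = depth_buffer[iy][ix]
        # Blocked if the stored surface is in front of the ray, but not so
        # far in front that the ray probably passes behind it.
        if ray_depth - thickness < scene_depth < ray_depth - bias:
            return True
    return False

row = [10.0] * 20   # flat floor at depth 10
row[5] = 9.8        # thin pole slightly in front of the floor
row[12] = 2.0       # object much closer to the camera
depth_buffer = [row]
print(screen_space_shadow(depth_buffer, 2, 0, 1, 0, 0.0))  # True: the pole blocks the ray
print(screen_space_shadow(depth_buffer, 8, 0, 1, 0, 0.0))  # False: x = 12 is rejected by `thickness`
```

The `thickness` test trades a few missed shadows for fewer false ones. Without it, anything in the foreground, such as a character walking past the camera, would shadow everything behind it.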

Days Gone uses contact shadows to complement shadow cascades. The effect is particularly noticeable on small objects and distant shadows, and adds depth to forests and terrain relief.

Frustum Tracing Shadows

To overcome the accuracy problem of shadow maps, there’s the Frustum Tracing Shadows (FTS) technique. As with shadow maps, this involves rendering from the point of view of the light. This time, instead of storing a depth for each pixel, we store a frustum for each triangle visible to the light. Here, the frustum is the pyramid-shaped volume bounded by the light and the triangle’s edges, extending beyond the triangle: it describes the area the triangle shadows. In the lighting render pass, to find out whether a pixel is in shadow, we need to detect whether it lies in one of the previously created frustums. This method requires a lot of computation, but it generates sharp shadows of perfect quality.
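The core geometric test can be sketched in Python for a point light. A point is shadowed by a triangle if it meets two conditions:

- it lies beyond the triangle's plane, as seen from the light;
- it lies inside the three planes passing through the light and each triangle edge.

This hypothetical sketch leaves out what makes FTS practical in a game, such as a light-space structure that limits how many triangles each pixel has to test.

```python
def sub(a, b):
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])

def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]

def in_shadow_frustum(point, light, triangle):
    """True if `point` lies inside the shadow frustum of `triangle`."""
    a, b, c = triangle
    normal = cross(sub(b, a), sub(c, a))
    # The point must be on the other side of the triangle's plane than the light.
    if dot(normal, sub(light, a)) * dot(normal, sub(point, a)) >= 0:
        return False
    # Side planes through the light and each edge: the point must be on the
    # same side as the triangle's third vertex (works for either winding).
    for p, q, r in ((a, b, c), (b, c, a), (c, a, b)):
        side = cross(sub(p, light), sub(q, light))
        if dot(side, sub(point, light)) * dot(side, sub(r, light)) < 0:
            return False
    return True

def is_shadowed(point, light, triangles):
    return any(in_shadow_frustum(point, light, t) for t in triangles)

light = (0.0, 5.0, 0.0)
occluder = ((-1.0, 1.0, -1.0), (1.0, 1.0, -1.0), (0.0, 1.0, 1.0))
print(is_shadowed((0.0, 0.0, 0.0), light, [occluder]))  # True: below the triangle
print(is_shadowed((3.0, 0.0, 0.0), light, [occluder]))  # False: outside the frustum
print(is_shadowed((0.0, 3.0, 0.0), light, [occluder]))  # False: between light and triangle
```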

Deep Shadow Maps

All the methods seen above share a common flaw: they only handle objects that completely block light. They ignore volumetric objects (clouds, dust…) and transparent objects. They also handle poorly the shadows of dense objects thinner than a pixel (hair, fur…). One way of dealing with this is to use Deep Shadow Maps, popularized by Pixar in 2000. As with shadow maps, this involves rendering from the point of view of the light. But instead of storing a depth, we store a function describing the light intensity (visibility) as a function of distance. To do this, we cast a ray forward from each pixel. At regular intervals, we measure how much light has been absorbed so far. Once the maximum distance has been reached, the gathered samples are approximated by a compact function; Pixar’s paper uses a piecewise-linear curve.
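Below is a Python sketch of building one texel's visibility function, under simplifying assumptions:

- `density` is a hypothetical function giving how strongly the medium absorbs light at each distance;
- absorption over each step follows the Beer-Lambert law;
- the samples are compressed with a simple greedy piecewise-linear fit, in the spirit of Pixar's paper but not its exact algorithm.

```python
import math

def sample_visibility(density, max_depth, steps):
    """March along one light ray and record (distance, visibility) samples.

    density(z) is how much light the medium absorbs per unit length at z."""
    dz = max_depth / steps
    samples = [(0.0, 1.0)]
    remaining = 1.0
    for i in range(steps):
        # Beer-Lambert law over one step, density taken at the step's middle.
        remaining *= math.exp(-density((i + 0.5) * dz) * dz)
        samples.append(((i + 1) * dz, remaining))
    return samples

def compress(samples, tolerance):
    """Greedy piecewise-linear fit: extend each segment while every skipped
    sample stays within `tolerance` of it, then start a new segment."""
    kept = [samples[0]]
    start, end = 0, 2
    while end < len(samples):
        (z0, v0), (z1, v1) = samples[start], samples[end]
        fits = all(
            abs(v0 + (v1 - v0) * (z - z0) / (z1 - z0) - v) <= tolerance
            for z, v in samples[start + 1:end]
        )
        if fits:
            end += 1
        else:
            start = end - 1  # last endpoint that still fitted
            kept.append(samples[start])
            end = start + 2
    kept.append(samples[-1])
    return kept

def evaluate(curve, z):
    """Visibility at distance z, by linear interpolation between vertices."""
    if z <= curve[0][0]:
        return curve[0][1]
    for (z0, v0), (z1, v1) in zip(curve, curve[1:]):
        if z <= z1:
            return v0 + (v1 - v0) * (z - z0) / (z1 - z0)
    return curve[-1][1]

def cloud(z):
    """A cloud between distances 1 and 2 along the light ray."""
    return 2.0 if 1.0 <= z < 2.0 else 0.0

samples = sample_visibility(cloud, max_depth=4.0, steps=40)
curve = compress(samples, tolerance=0.01)
print(len(samples), len(curve))  # 41 samples, fewer curve vertices
print(evaluate(curve, 3.0))      # within 0.01 of exp(-2), about 0.135
```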

Conclusion

Choosing the right way to manage shadows is an important step that can greatly influence the visual result and performance of a game. Most recent games use shadow cascades with penumbra management via PCF or PCSS, and more and more AAA games supplement their shadows with contact shadows. But real-time dynamic shadow rendering is still an active research topic, and new techniques are regularly published. Here, I’ve only scratched the surface of the subject and focused on the techniques that seem most important to me. I have deliberately omitted raytraced shadows; I’ll cover them in a future article dedicated to raytracing.

This article is part of the Real-Time Techs series dedicated to popularizing real-time rendering and simulation methods used in video games.

Sources and more:

🗣️ Talk | 👁️‍🗨️ PPT | 👨‍💻 Code | 📄 Paper

🗣️ Advanced Geometrically Correct Shadows for Modern Game Engines, Chris Wyman, Nvidia, 2016 – The Division

🗣️ Moment Shadow Mapping, Christoph Peters, 2016

👁️‍🗨️ Inside Bend: Screen Space Shadows, Graham Aldridge, Bend Studio, 2023 – Days Gone

👁️‍🗨️ The Last of Us Lighting, Michał Iwanicki, Naughty Dog, 2013

👁️‍🗨️ Advanced Soft Shadow Mapping Techniques, Nvidia, 2008

👨‍💻 Integrating Realistic Soft Shadows into Your Game Engine (PCSS Implementation), Nvidia, 2008

👨‍💻 ShadowFX – Open Source Library, AMD, 2018

📄 Shadow Silhouette Maps, Stanford University, 2003

📄 Rendering Fake Soft Shadows with Smoothies, Eric Chan and Fredo Durand, MIT, 2003

📄 Percentage-Closer Soft Shadows, Randima Fernando, Nvidia, 2005

📄 Deep Shadow Maps, Tom Lokovic and Eric Veach, Pixar, 2000

📄 Realistic Soft Shadows by Penumbra-Wedges Blending, Vincent Forest, Toulouse University, 2006

📄 Volumetric Shadow Mapping, Pascal Gautron, Jean-Eudes Marvie and Guillaume François, 2009